Just another blog post about AI… and Corona’s approach.

12/3/24 / David Kennedy

2024 may be remembered as the year that artificial intelligence (AI) became everyday language for most people, even if most still don’t know what it is (and is not) and probably don’t realize when they’re interacting with it in some form. In case you’re wondering, this post was 100% human created.

This year may also be the year we remember reaching peak hype. At least my inbox hopes it is – I’m sure I’m not the only one who gets multiple emails a day promising some new platform with AI embedded (or an old platform now rebranded with AI…).

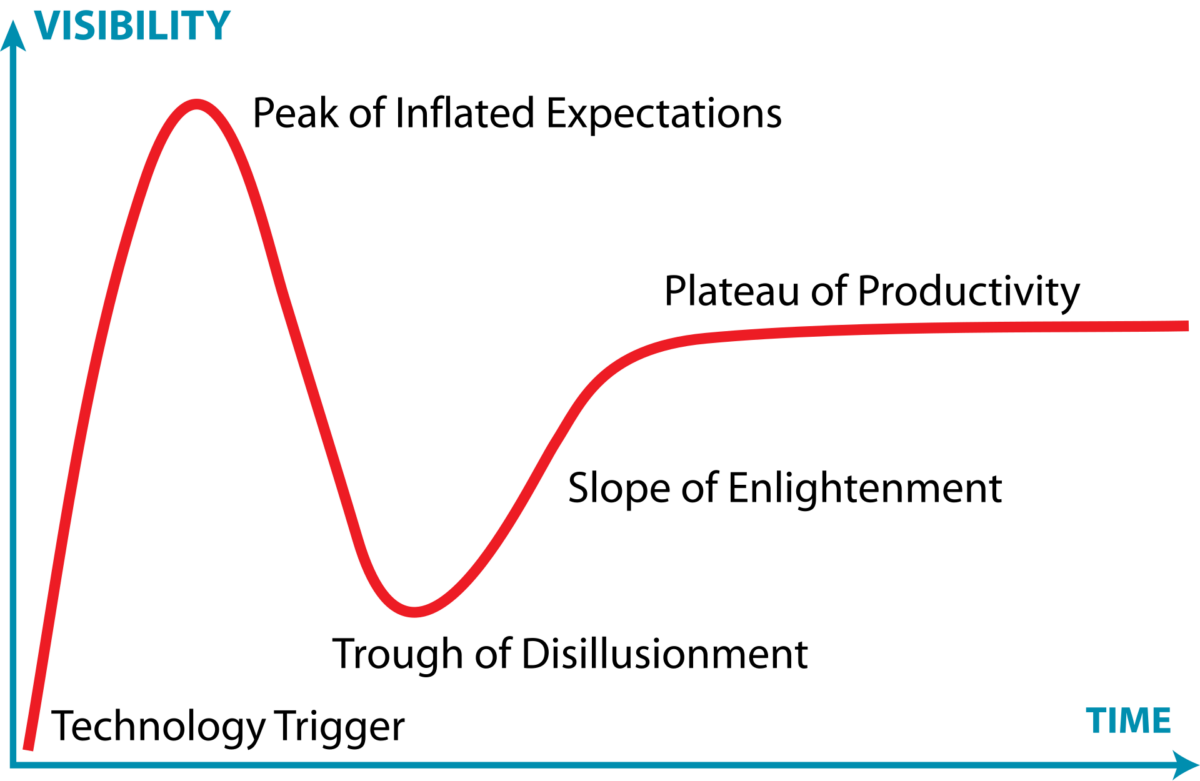

All of this made me think of the Gartner Hype Cycle:

Image from Wikipedia: https://en.wikipedia.org/wiki/Gartner_hype_cycle

My Four Stages of AI

I’ve been thinking about our industry’s own timeline and how it maps to the Hype Cycle.

- AI is new and exciting. I think this really kicked off with ChatGPT and other publicly available models. All of a sudden, anyone could control and interact with generative AI. The possibilities seemed endless, and so did the risks.

- Peak excitement. Anything that could be (re)branded as AI was, lest it get left behind by the new entrants in the field. From mining data to creating synthetic data, copyediting to report writing, there was someone promising to transform how we worked.

- Coming down to earth. But the promised transformation didn’t happen. Some products offered gains, but at best they required heavy handholding and at worst they were just wrong. In a recent industry report (GRIT Report), about half of respondents from full-service research firms completely or mostly agreed that “AI solutions will be a great boon to my business, etc.” Considerably fewer (about one third) completely or mostly agreed that “AI solutions will lead to fairer decisions” or “I trust people who train data for AI”.

- Slow and steady progress. Tools will keep getting better and we’ll keep getting better at using them. This is the unglamorous phase of adoption.

This is nothing new – we’ve seen it with online research, big data, and more. Real results will eventually show themselves, but for now there are probably more empty promises and false starts than industry-changing wins.

Where is Corona in all of this?

At Corona, we’re currently somewhere between stages 3 and 4. We’re past the hype, but continuing the work.

We see the potential for AI in our work, though we’re still more in the test-and-evaluate phase. The tools we’re using most are still pieces of private applications that assist with specific tasks like transcription or semantic coding. These are tasks that take up a disproportionate amount of time, so speeding them up could provide real value to our clients. However, our clients hire us to gain trusted insights into their data, and we have yet to find a tool that can reliably interpret the broader context of data and create meaningful findings.

In some cases, our trials have been laughably bad. In one trial, we asked an AI to restructure some data we had from the Census into the format we needed for analysis. We gave it the Excel file, told it what to do, and voilà, it did it. We cheered and thought fondly about the new leisure time ahead of us. But then we looked closer (and honestly, not even that closely to start) and something looked off. And then something else looked off. And… It was garbage. We have no idea what it did, but it was far from correct. Back to our old tools for restructuring data for now.

Our Safeguards

We’ve also been putting as much work into protecting data as we have into how we process and analyze it. In the rush to try what’s new, we fear some firms are not taking the steps needed to safeguard respondent or client data. When you upload data, how can the company behind that platform use it? As we explore new tools and evolve in this space, we are guided by these commitments:

- Transparency. We’ll tell you how we’re using AI tools with any data and are open to discussing our use in greater detail.

- Data protection. We will not upload any data gathered on your behalf, or provided by you, to free, public-use AI tools. Only walled-off, private applications will be used. Only data needed for our goals will be uploaded – anything extra, including personally identifiable information (PII), won’t be uploaded.

- Following the law. This may sound obvious, but we won’t upload or use copyrighted material.

What’s your take on AI within your own organization?