25 Years of Change – Demographic Analysis

6/18/24 / Kevin Raines

As Corona Insights arrives at our 25-year anniversary, we’re going to take a look back at the world 25 years ago and examine what has changed in the world of research. This blog post will focus on the evolution of how we gather and analyze demographic data and other data from existing sources.

The key elements of change in this part of our work are the availability and accessibility of data.

Census Data – Increased Availability

Our most commonly used source of demographic data is the US Census, which we rely on both in visible ways and behind the scenes. This arena has changed dramatically in the past 25 years, with detailed data now being released every year rather than once a decade, which is ten times as often.

When Corona Insights was founded in 1999, it was a challenging time to do even basic demographic analyses. At that time, the US Decennial Census was our main data source, and as the name implies, it was conducted only in years ending with a zero. So the most recent data available in 1999 was from the 1990 census. Yikes.

This meant that many of our demographic analyses had to use 1990 as a base, and then we had to develop projections forward to the current year, even for basic measures like population. We had to do more complex projections for other measures such as age, income, and myriad other census variables of interest. This added notable time and cost to our studies that made use of these data. When the 2000 US Census was completed, it made our work easier, but even then we had to wait until the data were finalized and released, which took another two years. Therefore, the early years of Corona Insights involved a lot of complex demographic analyses to gather data that today is easily and readily available.

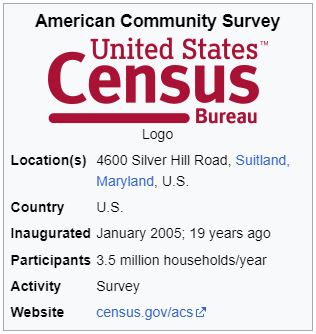

And why is it available today? We are very grateful for the US Census Bureau’s decision in 2005 to separate the Census into two parts: the formal decennial census as required by the US Constitution and a new element, the American Community Survey.

The formal census is still completed every ten years as required, but now collects only basic data as required for the democratic process. In contrast, the American Community Survey contains all of the valuable data that was previously collected only during census years, and it’s conducted annually. This gives us sound and defensible updated data every year, not to mention the potential for trend analyses.

This increased availability also includes new data that didn’t exist in 1999. While the Bureau of the Census has added and deleted various types of data over the years, the net effect is typically the arrival of new data that is relevant to current issues. For example, data on health insurance types and coverage became available in 2008 as the Affordable Care Act rose as an issue of national discussion, and data about internet access became available in 2013 as it rose as an issue of equity and access to resources.

Census Data – Increased Accessibility

The internet is an amazing place for data. In 1999, when we wanted detailed census data, we had to order it over the phone, and then it came a few weeks later.

In the mail.

On floppy disks.

If you don’t remember what floppy disks are, google them, but this is what they looked like.

Now we can go out and download data as fast as we can figure out what’s available and where it is. And not only that, the Census Bureau has been making more and more back data available, and new analysis tools as well.

The big issue now is keeping track of what data is available and how to obtain it. We invest significant time in exploration of the Census site and experimentation on new ways to obtain and analyze data. That’s a good problem to have, though.

Other Data Sources

The Census isn’t the only game in town, of course. While data from other reputable sources has always been available, it historically faced the same challenges that the Census data had: it took time and work to obtain the data, and it was a big effort to find, catalog, and understand how to access these sources for our clients.

It remains a big effort to understand these sources today, as more data becomes available each year from reputable sources such as federal agencies, state governments, and even local governments. But the key is that the data exists now, and we can access it more quickly than in the past. In many instances we have access to more detailed data, or even raw databases that let us customize our analyses.

Of course, more data also requires more scrutiny. As you might know, not everything on the Internet is true. So our job increasingly entails making sure that the data sources we use are proper and accurate, which generally means assessing the quality of the data source. Some data, even if gathered and posted with good intent, may not be of sufficient quality to trust. Therefore, vetting data and data sources is an increasingly important part of our work.

Increased Data Complexity

As the Internet matured and more data appeared online, we briefly wondered if the need for a research consultant would eventually go away. After all, if people can pull down the data themselves, they may not need us.

However, the challenges of 1999 morphed into new challenges in 2024. A lot of data is available, but in many cases there’s a notable learning curve to access the data, and then a huge learning curve to analyze the data once it’s retrieved. Many raw data sources require statistical weighting before use, and many large data sets require skill in data analysis tools to manipulate. And frankly, while we love working with big data sets, not everyone gets excited about doing that.
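For readers curious about what statistical weighting looks like in practice, here is a minimal Python sketch. It is only an illustration: the records, the `insured` field, the `weight` field, and the `weighted_share` helper are all made up for this example and do not come from any particular data source. The idea is that each raw record stands in for a different number of people, so a simple count of records can give a misleading answer.

```python
def weighted_share(records, key, weight="weight"):
    """Return the weighted share of records where record[key] is true."""
    total = sum(r[weight] for r in records)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    hits = sum(r[weight] for r in records if r[key])
    return hits / total


# Hypothetical raw records: one row per respondent, and "weight" is the
# number of people in the population that the respondent represents.
sample = [
    {"insured": True, "weight": 120.0},
    {"insured": True, "weight": 80.0},
    {"insured": False, "weight": 300.0},
    {"insured": True, "weight": 100.0},
]

# Naive estimate: treat every row as if it counted equally.
unweighted = sum(r["insured"] for r in sample) / len(sample)

# Weighted estimate: let each row count for the people it represents.
weighted = weighted_share(sample, "insured")

print(f"Unweighted share insured: {unweighted:.0%}")
print(f"Weighted share insured:   {weighted:.0%}")
```

In this invented example, three of the four records are insured, but the one uninsured record represents half of the population, so the weighted estimate is much lower than the raw count suggests. Real data sources document their own weight variables and rules, which is part of the learning curve.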

And then of course just knowing what data exists is important. As time goes by and the data world gets ever larger, we are building an ever-larger knowledge base of the types of data that we can obtain and analyze. Frankly, a big way that we can add value in 2024 is by telling a client that they may not need to pay for a survey to develop statistics because we’ve seen the data deep in the storehouse of some government agency.

So What Does This Mean To Our Clients?

Twenty-five years of data evolution have produced strong progress. We can now get more current data than we could back in our inaugural year of 1999, and we can get it faster than we could in the past. The explosion of data availability has added more complexity in terms of knowing where to find data, and also in terms of assessing the quality of data. This requires more proactive attention and resources on our part, but it pays off when we can identify good, cost-effective sources of data to support our clients’ research goals.